New ingestion-SampleData-as-a-service solution for great demos and simulations

Demonstrating Microsoft Sentinel features such as security incidents, alerts, workbooks, and meaningful hunting queries depends on the data behind them. For any SIEM solution, good demos and simulations rely on predictable ingested data.

Our new Sentinel ingestion-SampleData-as-a-service solution uses the new Azure Monitor API to ingest raw events into Sentinel instances and manipulate them along the way.

This tool provides a simple way to ingest sample data, either once or on a schedule, into a built-in table or a custom table. It accepts log files (JSON or CSV) hosted in public GitHub repositories or in Azure Storage accounts (optionally protected by a SAS key).

With this solution, users can also transform these logs before they are sent to the destination table.

We can use this solution to ingest data on demand into the following tables:

- SecurityEvent

- Syslog

- WindowsEvent

- CommonSecurityLog

- ASimDnsActivityLogs

- Custom tables

This tool can be used to address the following business use cases:

- Detection simulation – The detection engine in Microsoft Sentinel runs KQL query logic against raw events after they are ingested. If a query finds a match, it creates an alert and an incident. With this tool, detection engineers can ingest security data and apply transformations to control the entities and fields that the detection exposes. The tool can also be used to test both built-in and newly created detections.

- Demo lab with live incidents and workbooks – To demonstrate Sentinel functionality and train the SOC on investigation procedures and Sentinel features, both customers and partners need a live demo environment with continuously updated incidents and workbooks. With this tool, we can ingest data on a schedule to trigger incidents that customers can use to build demo scripts.

- End-to-end testing of Sentinel functionality – SIEM engineers can build monitoring around different product features by ingesting expected data into the system on a schedule.

We can test the following scenarios:

- Delays in log ingestion

- Functionality of the analytics rule engine

- Creation of incidents

- Automation scenarios (for example, adding an automation rule that runs when an incident or alert is created)

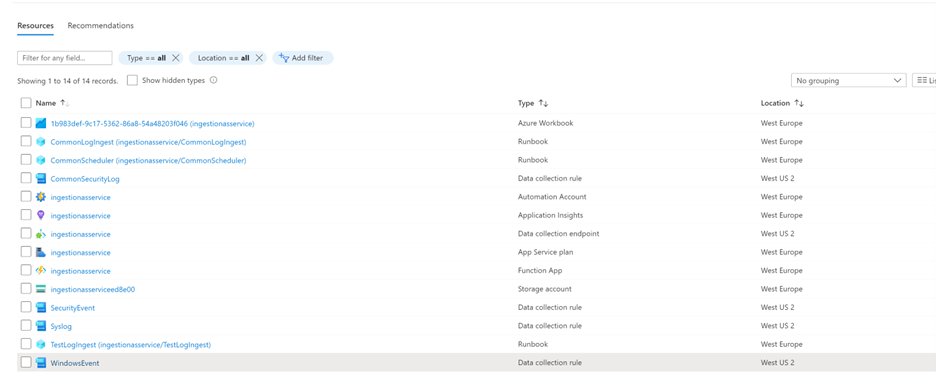

Solution components

- Presentation layer – An Azure workbook, where the user points to the input file and defines the transformation.

- Ingestion engine – An Azure Automation account with three runbooks.

- Schema management – Azure Functions serve as parser helpers that build the list of fields to replace.

- Data collection rules – As part of the solution deployment, we create four DCRs (data collection rules) for every built-in table. If users choose to ingest data into custom tables, we create the DCRs at ingestion time and delete them afterward.

Deploying the Solution

To deploy this solution, log in with a user that has deployment permissions, navigate to this GitHub repository, and press Deploy.

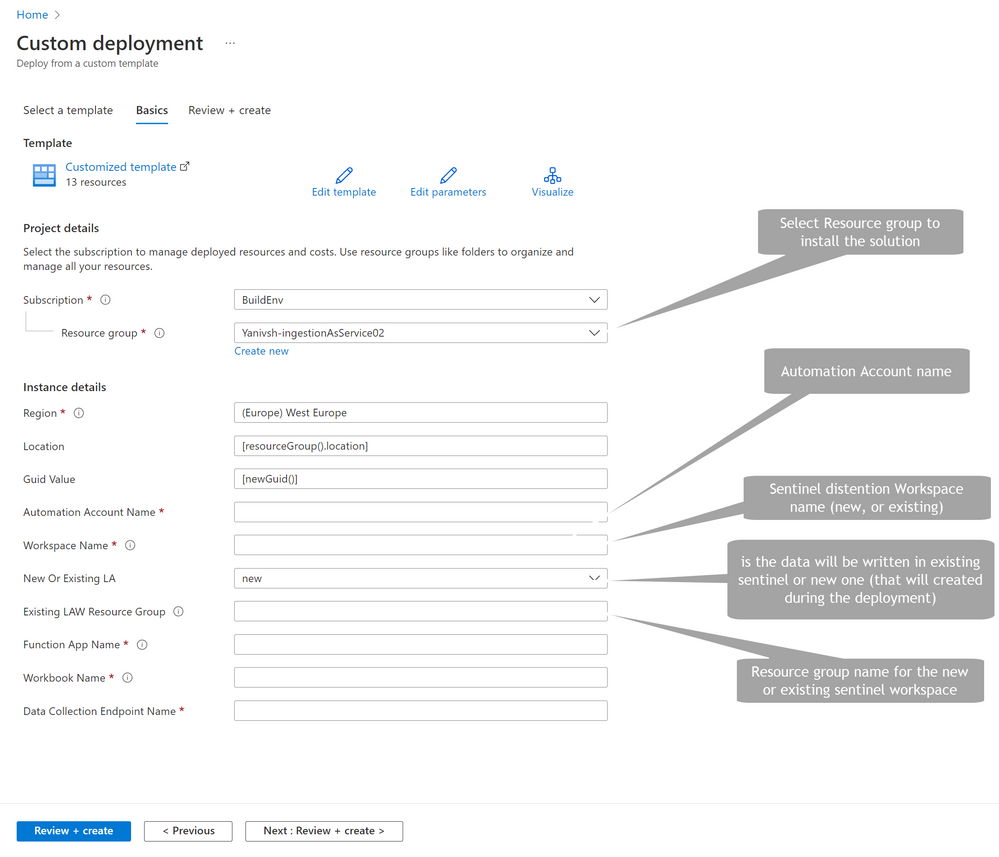

On the Azure template deployment page, review the input properties.

As soon as the installation is complete, open the relevant resource group and review the new resources.

During the post-deployment phase, assign the permissions listed below to the two managed identities. Note that the permission assignments may differ if the solution is deployed in a resource group other than that of the target Sentinel workspace.

The required post-deployment permissions are:

| Identity type | Permission | Scope |
| --- | --- | --- |
| Automation account managed identity | Automation Contributor | Workspace resource group |
| Automation account managed identity | Log Analytics Contributor | Workspace resource group |
| Automation account managed identity | Monitoring Analytics Contributor | Workspace resource group |
| Automation account managed identity | Monitoring Metrics Publisher | Solution resource group |
| Azure function managed identity | Reader | Workspace resource group |

We are ready to ingest some sample data!

How to use the tool

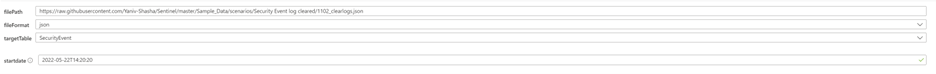

When we open the workbook and approve the trusted zone notification, we see the input properties shown above. Each property is described in the sections below.

filePath:

The filePath property expects a file from a public GitHub repository or an Azure Storage account (which can be protected by a SAS key).

An example location on GitHub is https://raw.githubusercontent.com/Yaniv-Shasha/Sentinel/master/Sample_Data/scenarios/Security Event ...

Example for Storage account file input:

FileFormat:

Set this to CSV or JSON, depending on the input file.
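To make the two formats concrete, here is a minimal sketch of how file content could be turned into event records. It is not the solution's runbook code; the function name `parse_sample` and the sample column values are hypothetical. Note that CSV values always arrive as strings, while JSON keeps its native types.

```python
import csv
import io
import json
from typing import Dict, List


def parse_sample(text: str, file_format: str) -> List[Dict]:
    """Parse raw file content into a list of records (one dict per event)."""
    fmt = file_format.strip().upper()
    if fmt == "JSON":
        data = json.loads(text)
        # Accept either a single object or an array of objects.
        return data if isinstance(data, list) else [data]
    if fmt == "CSV":
        # Assumption: the first row holds the column names.
        return [dict(row) for row in csv.DictReader(io.StringIO(text))]
    raise ValueError(f"Unsupported file format: {file_format!r}")


# Hypothetical sample content, for illustration only.
csv_text = "Computer,Account,EventID\nhost1,alice,4624\n"
json_text = '[{"Computer": "host1", "Account": "alice", "EventID": 4624}]'

csv_records = parse_sample(csv_text, "CSV")
json_records = parse_sample(json_text, "json")
```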

TargetTable

Select the destination table.

Note that the input file schema must align with the destination table schema for the data to be ingested successfully.

- SecurityEvent

- Syslog

- WindowsEvent

- CommonSecurityLog

- ASimDnsActivityLogs

The table schemas are documented in the Azure Monitor table reference index by category (Microsoft Docs).
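Before ingesting, it can help to check a sample file against the target schema. The sketch below is one possible pre-check, not part of the solution itself; `unexpected_fields` is a hypothetical helper, and the column list is only an illustrative subset of the Syslog table, so consult the table reference for the full schema.

```python
from typing import Dict, Iterable, List

# Illustrative subset of Syslog columns; not the complete table schema.
SYSLOG_COLUMNS = [
    "TimeGenerated",
    "Computer",
    "Facility",
    "SeverityLevel",
    "SyslogMessage",
    "HostName",
    "ProcessName",
]


def unexpected_fields(records: Iterable[Dict], table_columns: Iterable[str]) -> List[str]:
    """Return the sorted field names that appear in the records but not in the table."""
    allowed = set(table_columns)
    extra = set()
    for record in records:
        extra.update(set(record) - allowed)
    return sorted(extra)


# Hypothetical sample events: the second one carries a field the table lacks.
sample_events = [
    {"Computer": "host1", "Facility": "auth", "SyslogMessage": "session opened"},
    {"Computer": "host2", "Facility": "auth", "Message": "session closed"},
]

mismatches = unexpected_fields(sample_events, SYSLOG_COLUMNS)
```

An empty result means every field in the file maps to a column in the chosen list; anything returned is worth renaming or dropping before ingestion.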

For custom tables, the target table schema can be defined in one of two ways:

- existedSchemaLink is not set – The table schema is created directly from the input file. The downside is that all fields are created as string or datetime columns.

- existedSchemaLink is defined – The user points the tool to a repository with schema files (for example, Azure-Sentinel/.script/tests/KqlvalidationsTests/CustomTables at master · Azure/Azure-Sentinel (gith...), and the tool creates the DCR and the target table directly from the schema file.
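The first option can be pictured with a small sketch. This is only an illustration of why file-derived schemas lose type information, not the tool's actual logic; `infer_columns` is a hypothetical helper, and treating only TimeGenerated as a datetime column is an assumption.

```python
from typing import Dict, Iterable, List


def infer_columns(records: Iterable[Dict]) -> List[Dict[str, str]]:
    """Build a column list from the field names seen in the records."""
    columns: Dict[str, str] = {}
    for record in records:
        for name in record:
            # Assumption: only TimeGenerated becomes datetime; all else is string.
            columns.setdefault(name, "datetime" if name == "TimeGenerated" else "string")
    return [{"name": name, "type": col_type} for name, col_type in columns.items()]


# Hypothetical sample events, for illustration only.
sample = [
    {"TimeGenerated": "2022-05-22T14:20:20", "Account": "alice", "EventID": 4624},
    {"Computer": "host1"},
]

inferred = infer_columns(sample)
```

Here EventID ends up as a string column even though the sample value is numeric, which is the kind of downside a schema file avoids.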

startDate

The startDate (the TimeGenerated value) is an important field. This section covers the different use cases around it.

- startDate is empty – The solution uses the current datetime.

- The user sets a specific date in the startDate field – The solution accepts only ISO 8601 format (yyyy-MM-ddTHH:mm:ss), for example 2022-05-22T14:20:20.

Note: If the user chooses to ingest the data on a schedule, the solution disregards the TimeGenerated fields and pushes the data at the nearest time, then follows the schedule.
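The two startDate cases can be sketched as follows. This is an illustration rather than the solution's code; `resolve_start_date` is a hypothetical name, and interpreting the value as UTC is an assumption.

```python
from datetime import datetime, timezone
from typing import Optional

# strptime pattern matching the documented form, e.g. 2022-05-22T14:20:20
ISO_FORMAT = "%Y-%m-%dT%H:%M:%S"


def resolve_start_date(start_date: Optional[str], now: Optional[datetime] = None) -> datetime:
    """Return the timestamp to use for ingestion.

    An empty value falls back to the current time. Anything else must match
    ISO_FORMAT, otherwise datetime.strptime raises ValueError.
    """
    if start_date is None or not start_date.strip():
        return now if now is not None else datetime.now(timezone.utc)
    parsed = datetime.strptime(start_date.strip(), ISO_FORMAT)
    # Assumption: the supplied value is interpreted as UTC.
    return parsed.replace(tzinfo=timezone.utc)


explicit = resolve_start_date("2022-05-22T14:20:20")
fallback = resolve_start_date("")
```

The optional `now` parameter exists only to make the fallback deterministic in tests.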

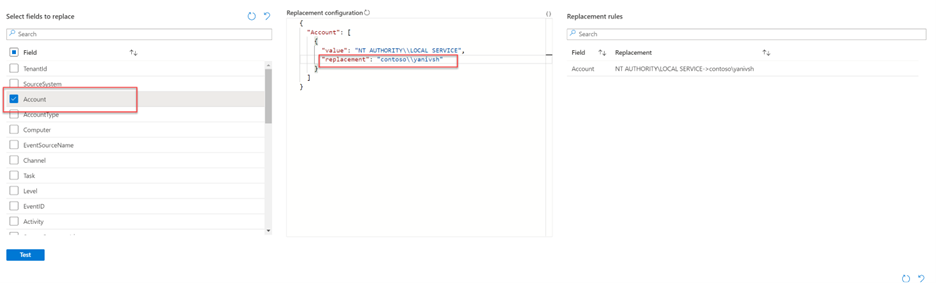

Select fields to replace

Users can overwrite data from the sample file using this option.

By using Azure Monitor's brand-new API, the solution lets users modify values before ingestion occurs.

The user must choose a column in the replace list before ingestion can begin. A user who does not want to overwrite anything still needs to select a column and leave its value unchanged.

In the screenshot, the Account column is selected and its replacement value is changed to a new account name.
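Conceptually, the replacement is a per-column overwrite applied to every event before it is sent. The sketch below illustrates that idea only; it is not how the solution implements it, and `replace_fields` and the sample values are hypothetical.

```python
from typing import Dict, List


def replace_fields(records: List[Dict], replacements: Dict[str, object]) -> List[Dict]:
    """Return copies of the records with the selected columns overwritten.

    Columns that a record does not contain are left out, so the replacement
    never adds new fields. The input records are not modified.
    """
    return [
        {**record, **{k: v for k, v in replacements.items() if k in record}}
        for record in records
    ]


# Hypothetical sample events, for illustration only.
events = [
    {"Account": "alice", "Computer": "host1"},
    {"Account": "bob", "Computer": "host2"},
]

updated = replace_fields(events, {"Account": "demo-user"})
```

Returning new dictionaries keeps the original sample data intact, which is convenient when the same file is ingested repeatedly with different replacement values.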

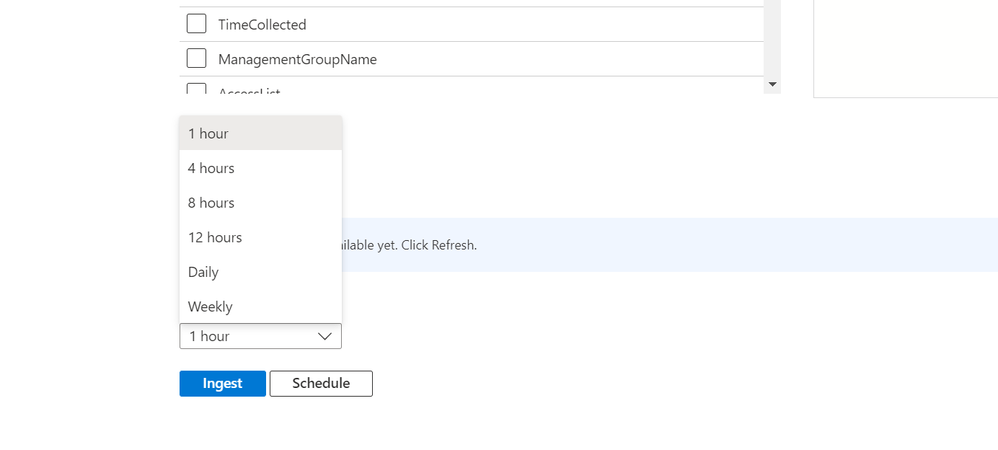

Ingestion scheduling

Users can define one-time or periodic ingestion:

- One-time ingestion – The user presses the Ingest button.

- Scheduling – The user selects the recurring time range and presses the Schedule button.

Now it's time to simulate sample data and create great demos in Microsoft Sentinel!
