- Home

- Content Management

- Microsoft Graph Data Connect for SharePoint Blog

- Four Options for SharePoint Site Analytics

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

Overview

Here’s a scenario: imagine that you are a SharePoint Online administrator and you have been asked to provide analytics about how the company is leveraging these resources.

You want to understand:

- How many sites of each type do we have and how much storage do they use?

- How many sites have no new content in the last 12 months?

Well, Microsoft offers you a few options…

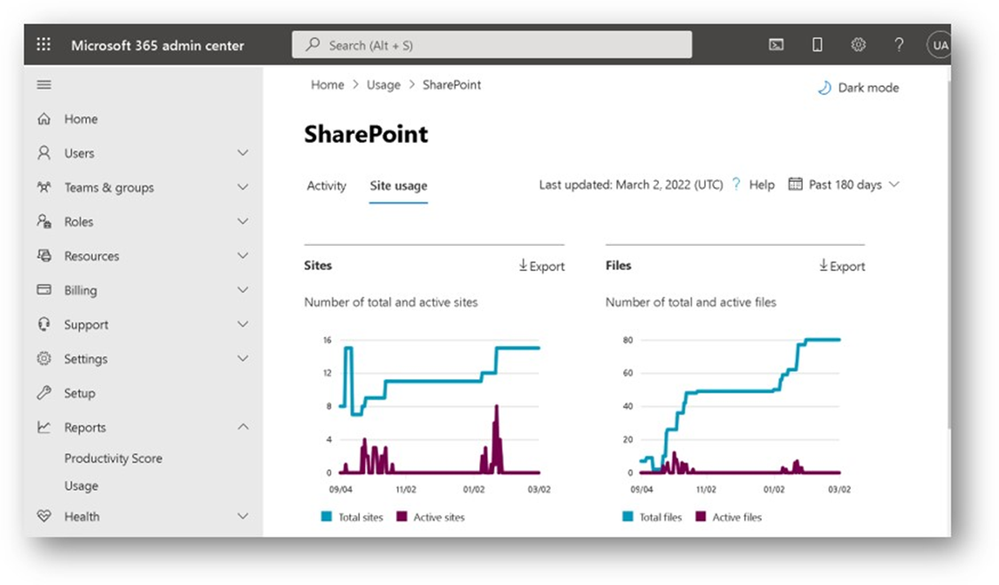

Option 1: Admin Portal Usage Reports

You can get some site information from the Microsoft 365 Admin Portal.

Follow these steps:

- Go to the Admin Portal at https://admin.microsoft.com

- Log on with an administration account

- Expand the “… Show all” option

- Select “Reports” and “Usage”

- In the usage reports page, find the charts for “OneDrive files” and “SharePoint files”

- Click the “view more” button under the “latest number of files in SharePoint”

- In the “Home > User > SharePoint” page, find the two tabs for “activity” and “site usage”

- Select “site usage”

You will see some site-level analytics:

At the bottom of that page, you will find a list of sites that you can export and use in other tools like Excel or Power BI. The download file will be named SharePointSiteUsageDetail, plus the date and time, with a CSV file extension.

If you open the file using Excel, the data will look like this:

You could transpose the data to look at the columns in more detail:

You could also create Excel pivot tables with the data for your sites. For instance, you could get a summary of sites, files, and bytes by Root Web Template:

Notes:

- The data might be a few days old

- There is no simple way to automate the process (run this every day, for instance)

- The user must be granted Admin credentials to gather information about sites

Additional information:

Option 2: PowerShell scripts

Another option is to use PowerShell to get the information. To get started, open a PowerShell prompt and use the Install-Module cmdlet to install the SharePoint module. You can then use the Get-Module cmdlet to confirm that it’s there.

Install-Module -Name Microsoft.Online.SharePoint.PowerShell

Get-Module *SharePoint* | Select Name,Version

Next, you need to connect to SharePoint Online with an administrator account using the Connect-SPOService cmdlet. The URL to use for the connection starts with your tenant’s name followed by “-admin.sharepoint.com”. After you issue the Connect-SPOService cmdlet below, you will need to provide the admin credentials.

Connect-SPOService -url https://archimedes-admin.sharepoint.com

After you are connected, you can get a list of SharePoint Sites using the Get-SPOSite cmdlet. You can pipe the output to a Select to see just a few of the columns and to a Where to filter the list. Make sure to use the option to include ODBs (not included by default) and the option to lift limits (by default it shows only the first 200 sites).

Get-SPOSite -IncludePersonalSite $true -Limit All |

Where StorageUsageCurrent -gt 0 |

Select Title, Template, StorageUsageCurrent |

Sort StorageUsageCurrent -Descending

The Get-SPOSite cmdlet exposes quite a few properties for each site. Here’s a cmdlet to show all the properties for a specific site:

Get-SPOSite -Limit All | Where Title -eq "Archimedes Team Site" | Format-List

There is also a way to export the data from Get-SPOSite to a CSV file so you can use with other tools like Excel or Power BI. Here’s an example.

Get-SPOSite -IncludePersonalSite $true -Limit All |

Export-Csv -Path .\sitelist.csv -Encoding UTF8 -NoTypeInformation

Notes:

- For tenants with many sites, it might take a long time to get all the results

- If the request does take a long time, you can pipe the output to “Out-Host -Paging” to see the results in pages, without having to wait for the whole list to be retrieved to see things

- If there are problems with a long-running request, there is no simple way to resume from where you stopped

- PowerShell requests are subject to the permissions granted to the user running the requests. If the user running the command lack permissions to specific sites, they will not be listed

Additional information:

- Get started with the SharePoint Online Management Shell | Microsoft Docs

- Get-SPOSite (SharePointOnlinePowerShell) | Microsoft Docs

Option 3: Graph API applications

Another way to get a list of sites is writing an application that uses the Graph API. For this one, you will have to setup an application id in Microsoft Entra Id (Azure Active Directory), choose an application development environment and do some basic coding.

To get started, use Microsoft Entra Id (Azure Active Directory) to create an Application Identity. You will need that to access the information from your application.

Once you get that ready, make sure to grant the proper API access to your application. For application permissions, be sure to add “Sites.Read.All” and click on the button to “Grant admin consent” after adding the permission.

To avoid putting credentials in code, store the tenantId, clientId and clientSecret as environment variables for your machine, which you can pull from the code.

After the Application Id and API permissions are set in Azure Active Directory, you should make sure to install the Graph API tooling. There are several ways to do that, depending on the platform and package manager you use.

In my case, I am using C# with .NET Core in Windows. To install the Graph SDK in my case, I needed an elevated PowerShell prompt to run:

Install-Package Microsoft.Graph

Once that is done, you will use Visual Studio to create a new project. To keep things simple, you can select the project template for C# Console App. In that app, we will need to take care of authentication, so your application can request data from SharePoint Online. You will use your application credentials to create your Graph API client and then you can query for sites…

using Azure.Identity;

using Microsoft.Graph;

var scopes = new[] {"https://graph.microsoft.com/.default"};

var tenantId = Environment.GetEnvironmentVariable("tenantId");

var clientId = Environment.GetEnvironmentVariable("clientId");

var clientSecret = Environment.GetEnvironmentVariable("clientSecret");

var options = new TokenCredentialOptions

{ AuthorityHost = AzureAuthorityHosts.AzurePublicCloud };

var clientSecretCredential = new ClientSecretCredential(

tenantId, clientId, clientSecret, options);

var graphClient = new GraphServiceClient(clientSecretCredential, scopes);

var sites = await graphClient.Sites.Request().GetAsync();

foreach (var site in sites) {

Console.WriteLine($"\nSite name: {site.DisplayName}");

Console.WriteLine($"Site URL: {site.WebUrl}");

Console.WriteLine($"Site last modified: {site.LastModifiedDateTime}");

}

Console.WriteLine($"Total sites: {sites.ToList().Count}");

Notes:

- For tenants with many sites, requesting all sites at once will take a long time. You will need to implement a mechanism for paging.

- If there are problems with a long-running request, there is no simple way to resume from where you stopped.

- If you issue too many requests using Graph API, you might be subject to API throttling.

Additional information:

- Install the Microsoft Graph SDK – Microsoft Graph | Microsoft Docs

- Choose a Microsoft Graph authentication provider – Microsoft Graph | Microsoft Docs

- Working with SharePoint sites in Microsoft Graph – Microsoft Graph v1.0 | Microsoft Docs

- Site resource type – Microsoft Graph v1.0 | Microsoft Docs

- Page through a collection using the Microsoft Graph SDKs – Microsoft Graph | Microsoft Docs

- Avoid getting throttled or blocked in SharePoint Online | Microsoft Learn

Option 4: Microsoft Graph Data Connect (MGDC)

Finally, you have the option to use the Microsoft Graph Data Connect (MGDC) to get a list of all your sites. This request is created using the Azure Synapse and will deliver all sites at once to an Azure Storage account that you own.

The MGDC allows you to pull an entire dataset with a single request. Even if you have hundreds of thousands of sites, you will get a complete picture including every single site in your SharePoint tenant. You can automate this process (for instance, you can trigger it daily) and you will not be subject to throttling.

Here is an outline of the steps to make it happen:

- Provision an Approvers group (to approve each MGDC request) in Azure Active Directory

- Provision an Application Id (to run the MGDC requests) in Azure Active Directory

- Create an Azure Storage account (to store the extracted data), grant access to the application

- Enable MGDC in the Tenant Admin Portal and check the box to enable SharePoint datasets

- Use the Azure Synapse Copy Data tool to create and run a pipeline that reads from Microsoft 365 and writes to Azure Storage

- Use Power BI to show the data from Azure Storage in a report or dashboard.

Notes:

- You will need an Azure account to use this option

- The SharePoint data from MGDC will be 2 days old

- You will need two people to run a pipeline (one to trigger it and another to approve it)

Additional links:

- Overview of the Microsoft Graph Data Connect

- Links about SharePoint on MGDC

- Step-by-step: Gather a detailed dataset on SharePoint Sites using the Microsoft Graph Data Connect

- MGDC for SharePoint FAQ: What is the size of my sites?

Conclusion

As you can see, there are many Microsoft tools to get information about your sites in SharePoint Online. They each have different requirements and restrictions. I am working on option 4, which was introduced in Preview recently.

Do you use one of them? Do you use something else? Feel free to share it below.

You must be a registered user to add a comment. If you've already registered, sign in. Otherwise, register and sign in.