- Home

- Data Architecture

- Data Architecture Blog

- Realtime analytics from SQL Server to Power BI with Debezium

- Subscribe to RSS Feed

- Mark as New

- Mark as Read

- Bookmark

- Subscribe

- Printer Friendly Page

- Report Inappropriate Content

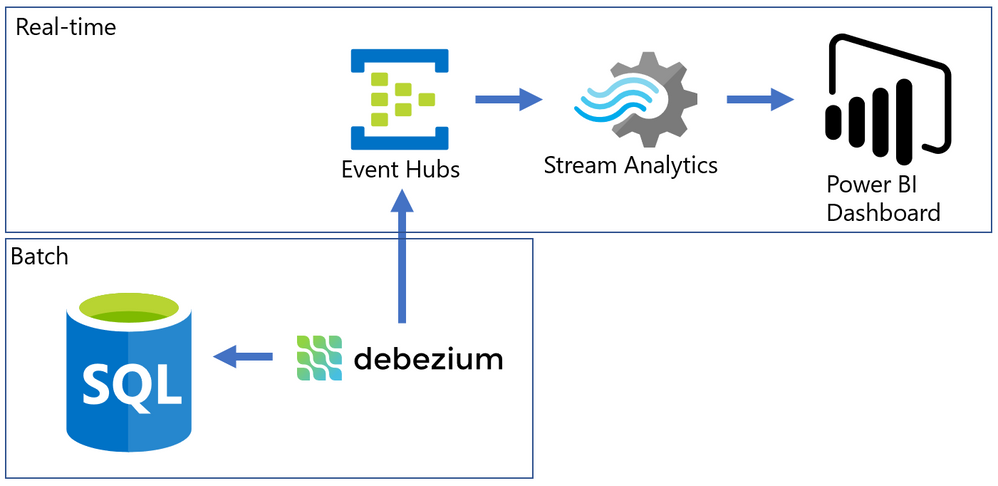

Almost every modern data warehouse project touches the topic of real-time data analytics. In many cases, the source systems use a traditional database, just like SQL Server, and they do not support event-based interfaces.

Common solutions for this problem often require a lot of coding, but I will present an alternative that can integrate the data from SQL Server Change Data Capture to a Power BI Streaming dataset with the good help of an Open-Source tool named Debezium.

The Problem

SQL Server is a batch-oriented service, just like any DBMS. This means that one program must query it to get the result – so to have real-time analytics we would have to change this batch behavior to a streaming /event/push behavior.

On the other side, we have Azure Event Hubs, Stream Analytics, and Streaming datasets on Power BI. They work pretty well together if the data source is a stream producing events (something we can have with a custom code application or some Open Source solution like Kafka).

The challenge here was to find something to make the bridge between SQL Server and Event Hubs.

After some time looking for solutions, I found this Docs page (Integrate Apache Kafka Connect on Azure Event Hubs with Debezium for Change Data Capture - Azure Eve...) with an approach to bring CDC data from Postgres to Event Hubs.

The solution presented on Docs was more complex than I needed, so I simplified it by using a container deployment and by removing unnecessary dependencies, then I wrote this post where I hope I can present it in a simpler way.

The solution looks like this: On one side, we have SQL Server with CDC enabled in a few tables, on the other we have Azure ready to process events that came to Event Hub. To make the bridge, we have Debezium that will create one event per row found on the CDC tables.

Have you ever used Docker?

For my development environment, I decided to go for Docker Desktop. The new WSL2 backend makes it easy to run Linux containers (such as those needed by Debezium). It works well on Windows 10 and recent builds of Windows Server (Semi-Annual Channel). If you still never tried WSL2, I highly recommend it. (See: Install Docker Desktop on Windows | Docker Documentation)

After a few steps, I have installed the WSL2 feature, chose the Ubuntu 20.04 distro (there other distros available on Windows Store), and finished the setup of Docker Desktop on my Windows 10 Surface Laptop :smiling_face_with_smiling_eyes:.

How to install a Debezium container?

Debezium has a Docker Image available on hub.docker.com, named “debezium\server”.

Debezium Server is a lightweight version that does NOT have Kafka installed. The image has already the connector you need for SQL Server and can output the events directly to Event Hubs.

To install and configure the container, I ran only this single line on PowerShell.

docker run -d -it --name SampleDebezium -v $PWD/conf:/debezium/conf -v $PWD/data:/debezium/data debezium/server

This will download the docker image “debezium/server” and start a container named “SampleDebezium”

We are mounting 2 folders from the host machine to the container:

/conf - Holds the configuration file.

/data - Will store the status of Debezium. This avoids missing or duplicate data once the container is recreated or restarted.

In my lab, I used the configuration file below (place it on the /conf folder named as application.properties). (Don’t worry about the keys here, I changed them already)

You will have to change the SQL Server and Event Hubs connections to match your environment.

Sample application.properties file:

debezium.sink.type=eventhubs

debezium.sink.eventhubs.connectionstring=Endpoint=sb://er-testforthisblogpost.servicebus.windows.net/;SharedAccessKeyName=TestPolicy;SharedAccessKey=O*&HBi8gbBO7NHn7N&In7ih/KgONHN=

debezium.sink.eventhubs.hubname=hubtestcdc

debezium.sink.eventhubs.maxbatchsize=1048576

debezium.source.connector.class=io.debezium.connector.sqlserver.SqlServerConnector

debezium.source.offset.storage.file.filename=data/offsets.dat

debezium.source.offset.flush.interval.ms=0

debezium.source.database.hostname=sqlserverhostname.westeurope.cloudapp.azure.com

debezium.source.database.port=1433

debezium.source.database.user=UserDemoCDC

debezium.source.database.password=demo@123

debezium.source.database.dbname=TestCDC

debezium.source.database.server.name=SQL2019

debezium.source.table.include.list=dbo.SampleCDC

debezium.source.snapshot.mode=schema_only

debezium.source.max.queue.size=8192

debezium.source.max.batch.size=2048

debezium.source.snapshot.fetch.size=2000

debezium.source.query.fetch.size=1000

debezium.source.poll.interval.ms=1000

debezium.source.database.history=io.debezium.relational.history.FileDatabaseHistory

debezium.source.database.history.file.filename=data/FileDatabaseHistory.dat

Setting up the Change Data Capture

My SQL Server is hosted on Azure (but this is not a requirement) and to create a lab enviorement, I created a single table and enabled CDC on it by using this script:

-- Create sample database

CREATE DATABASE TestCDC

GO

USE TestCDC

GO

-- Enable the database for CDC

EXEC sys.sp_cdc_enable_db

GO

-- Create a sample table

CREATE TABLE SampleCDC (

ID int identity (1,1) PRIMARY KEY ,

SampleName nvarchar(255)

)

-- Role with privileges to read CDC data

CREATE ROLE CDC_Reader

-- =========

-- Enable a Table

-- =========

EXEC sys.sp_cdc_enable_table

@source_schema = N'dbo',

@source_name = N'SampleCDC',

@role_name = N'CDC_Reader', -- The user must be part of this role to access the CDC data

--@filegroup_name = N'MyDB_CT', -- A filegroup can be specified to store the CDC data

@supports_net_changes = 0 -- Debezium do not use net changes, so it is not relevant

-- List the tables with CDC enabled

EXEC sys.sp_cdc_help_change_data_capture

GO

-- Insert some sample data

INSERT INTO dbo.SampleCDC VALUES ('Insert you value here')

-- The table is empty

select * from SampleCDC

-- But it recorded CDC data

select * from [cdc].[dbo_SampleCDC_CT]

/*

-- Disable CDC on the table

--EXEC sys.sp_cdc_disable_table

--@source_schema = N'dbo',

--@source_name = N'SampleCDC',

--@capture_instance = N'dbo_SampleCDC'

*/

Debezium will query the latest changed rows on CDC based on its configuration file and create the events on Event Hub.

Event Hub and Stream Analytics

I created an Event Hub Namespace with a single Event Hub to hold this experiment. There is no special requirement for the event hub. The size will depend only on the volume of events your application will send to it.

Once it is done, we have to create a Shared Access Policy. The connection string is what you need to add to the Debezium application.properties file.

To consume the events and create the Power BI streaming dataset, I used Azure Stream Analytics.

Once the Stream Analytics job is created, we have to configure 3 things: Inputs, Outputs, and Query.

Inputs

Here is where you say what the stream analytics will listen to. Just create an Input for your Event Hub with the default options. Debezium will generate uncompressed JSON files encoded on UTF-8.

Outputs

Here is where we will configure the Power BI streaming dataset. But you first need to know on which Power BI Workspace it will stay.

On the left menu, click on Outputs and then Add -> Power BI.

The options “Dataset name” and “Table name” are what will be visible to Power BI.

To test, the “Authentication Mode” as “User Token” is a good one, but for production, better use “Managed Identity”

Query

Stream Analytics uses a query language very similar to T-SQL to handle the data that comes in a stream input.

Check this link to find more about it Stream Analytics Query Language Reference - Stream Analytics Query | Microsoft Docs

On the example, I’m just counting how many rows (events) were generated on the last 10 minutes FROM an input INTO an output. The names on the query must match the ones you defined in the prior steps.

Here it is in the text version:

SELECT

count(*) over( LIMIT DURATION (minute, 10)) as RowsLast10Minutes

INTO

[TestCDC]

FROM

[InputCDC]

Make it Run

If everything is correctly configured, we will be able to start our Stream Analytics and our Container.

Stream Analytics

Docker Desktop

Power BI

Once the Stream Analytics is started, we will go to the Power BI workspace and create a Tile based on the streaming dataset.

If you don’t have a Power BI Dashboard on your workspace, just create a new one.

On the Dashboard, add a Tile.

Click on Real-time data and Next.

The dataset with the name you chose on Stream Analytics should be visible here.

Select the Card visual and the column RowsLast10Minutes, click Next, and Apply.

It should be something like this if there is no data being inserted on the table.

Now it comes the fun time. If everything is configured correctly, we just have to insert data on our sample table and see it flowing to the dashboard!

Known problems

It seems when a big transaction happens (like an update on 200k rows), Debezium stops pointing that the message size was bigger than the maximum size defined from Event Hubs. Maybe there is a way to break it into smaller messages, maybe it is how it works by design. If the base (CDC) table is OLTP-oriented (small, single row operations), the solution seems to run fine.

References

You must be a registered user to add a comment. If you've already registered, sign in. Otherwise, register and sign in.