Real-Time Lakehouse with Apache Flink and Apache Iceberg

A lakehouse is an open architecture that combines the flexibility, cost-efficiency, and scale of data lakes with the reliability, structure, and performance of data warehouses. This ensures that enterprises have the latest data available for analytics consumption. Data-driven enterprises need to keep their back-end and analytics systems in real-time sync with customer-facing applications. The impact of transactions, updates, and changes must be reflected accurately across end-to-end processes, related applications, and transaction systems.

The real-time data processing scenario is especially important for financial services industries. For example, if an insurance, credit card, or bank customer makes a payment and then immediately contacts customer service, the customer support agent needs to have the latest information. Similar scenarios apply to retail, commerce, and healthcare sectors. Enabling these scenarios streamlines operations, leading to greater organizational productivity and increased customer satisfaction.

Apache Iceberg is an open table format for massive analytic datasets. Iceberg adds tables to compute engines such as Trino and Spark, using a high-performance format that works like a SQL table. Data can be written into Iceberg through Flink, and the table can then be accessed through Spark, Flink, Trino, and other engines. As a table format designed for analyzing massive datasets, Iceberg sits between the compute and storage layers.

Looking downward, the table format manages the files in the storage system; looking upward, it provides the corresponding interfaces to the compute engines. Iceberg supports read-write separation, concurrent reads, incremental reads, and merging of small files, with latency in the range of seconds to minutes. Based on these advantages, we can use Iceberg to build a Flink-based real-time data warehouse architecture that features end-to-end real-time processing and unified stream and batch processing.
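To make the table-format idea concrete, here is a minimal Python sketch that models a table as a history of immutable snapshots, each pointing at a set of data files. It is a toy model under simplifying assumptions, not Iceberg's actual metadata layout or API; `ToyTable`, `append`, `compact`, and `scan` are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Snapshot:
    """An immutable view of the table: the set of data files at commit time."""
    snapshot_id: int
    files: frozenset  # names of the data files visible in this snapshot


@dataclass
class ToyTable:
    """Toy stand-in for a table format sitting between compute and storage."""
    storage: dict = field(default_factory=dict)    # file name -> list of rows
    snapshots: list = field(default_factory=list)  # commit history, oldest first

    def _commit(self, files):
        snap = Snapshot(len(self.snapshots) + 1, frozenset(files))
        self.snapshots.append(snap)
        return snap

    def current(self):
        return self.snapshots[-1] if self.snapshots else Snapshot(0, frozenset())

    def append(self, rows):
        """Write a new data file and commit a snapshot that includes it."""
        name = f"data-{len(self.storage) + 1}.parquet"
        self.storage[name] = list(rows)
        return self._commit(self.current().files | {name})

    def compact(self):
        """Merge all files of the current snapshot into one larger file."""
        merged = [row for f in sorted(self.current().files) for row in self.storage[f]]
        name = f"data-{len(self.storage) + 1}.parquet"
        self.storage[name] = merged
        return self._commit({name})

    def scan(self, snapshot=None):
        """Read every row visible in the given snapshot (default: latest)."""
        snap = self.current() if snapshot is None else snapshot
        return [row for f in sorted(snap.files) for row in self.storage[f]]


table = ToyTable()
table.append([1, 2])
pinned = table.append([3])   # a reader pins this snapshot
table.append([4, 5])         # a writer keeps committing in the meantime
table.compact()              # small files are merged in a new snapshot

rows_pinned = table.scan(pinned)  # unaffected by the later commits
rows_latest = table.scan()        # sees all rows, now from a single file
```

In this sketch the pinned reader keeps seeing the three rows of its snapshot while the writer commits and compacts, which is the intuition behind read-write separation; the old files stay in `storage` so earlier snapshots remain readable.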

The lakehouse represents a transformative approach to data management, merging the best attributes of data lakes and traditional data warehouses. It combines data lake scalability and cost-effectiveness with data warehouse reliability, structure, and performance. Three key technologies play pivotal roles in the lakehouse ecosystem: Delta Lake, Apache Hudi, and Apache Iceberg.

With HDInsight on AKS, the lakehouse architecture can be realized smoothly, because all the compute and query components run in the same compute pool on AKS and can scale elastically with usage.

Because Iceberg supports streaming reads, Flink on HDInsight on AKS can connect to Iceberg tables directly to run batch or stream processing tasks. The intermediate results can be processed further and then written to downstream systems.

Other open-source approaches have been noted to come with limitations, such as data timeliness guaranteed only at T+1 (next-day availability), poor integration with cloud object storage, and row-based storage that limits analytical capability. Apache Iceberg aims to solve these problems.

Here are some industry scenarios that show how enterprises can realize the value of Flink and lakehouses.

Real-time Data Pipelines

As data-driven applications demand real-time insights, the combination of Apache Iceberg catalogs and Apache Flink presents a compelling solution for building a robust real-time lakehouse architecture. It also helps with seamless schema evolution and comprehensive metadata management.

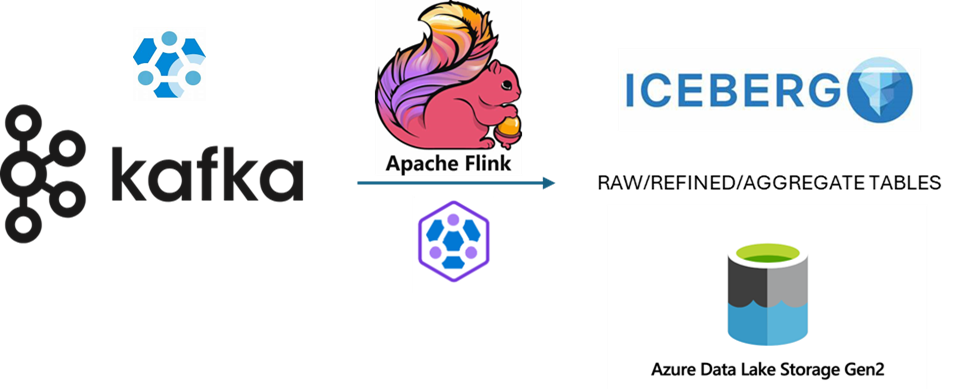

In an enterprise context, large amounts of log data are imported into message queues such as Apache Kafka. After the Flink streaming engine completes the transformation operations, the data can be loaded into an Apache Iceberg table. However, there are several cases in which the data needs to be curated from raw tables into aggregated tables. In such cases, a new Flink job can be created to consume the incremental data from the Iceberg table and, after further transformation and curation, write it in the same format into a refined table. Another incremental Flink job can then write into a final aggregate table, also in an open format such as Iceberg. Because Iceberg supports low-latency incremental writes, it improves the overall real-time nature of log analysis for the enterprise. The data stays fresh and is always available for analysis or for downstream applications, which can access the incrementally updated data continuously.
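The raw-to-refined-to-aggregate flow can be sketched in plain Python as a chain of jobs that each remember the last snapshot they consumed. This is only an illustration of the incremental-consumption idea; `AppendTable`, `IncrementalJob`, and the log fields are hypothetical, and a real deployment would use Flink jobs reading Iceberg tables rather than these in-memory stand-ins.

```python
import json
from collections import Counter


class AppendTable:
    """Toy append-only table: each commit adds one batch of rows."""

    def __init__(self):
        self.commits = []  # commits[i] holds the rows added by snapshot i + 1

    def commit(self, rows):
        self.commits.append(list(rows))

    def read_since(self, last_seen):
        """Return (rows added after snapshot `last_seen`, newest snapshot id)."""
        new_rows = [row for batch in self.commits[last_seen:] for row in batch]
        return new_rows, len(self.commits)


class IncrementalJob:
    """Toy stand-in for a job that consumes only snapshots it has not seen."""

    def __init__(self, source, process):
        self.source = source
        self.process = process
        self.last_seen = 0  # a real job would keep this in checkpointed state

    def run_once(self):
        rows, self.last_seen = self.source.read_since(self.last_seen)
        if rows:
            self.process(rows)
        return len(rows)


raw, refined = AppendTable(), AppendTable()
error_counts = Counter()  # plays the role of the final aggregate table


def refine(lines):
    # Parse raw JSON log lines and keep only the fields needed downstream.
    parsed = [json.loads(line) for line in lines]
    refined.commit({"service": p["service"], "level": p["level"]} for p in parsed)


def aggregate(rows):
    # Maintain a running count of ERROR events per service.
    error_counts.update(r["service"] for r in rows if r["level"] == "ERROR")


raw_to_refined = IncrementalJob(raw, refine)
refined_to_agg = IncrementalJob(refined, aggregate)

raw.commit(['{"service": "api", "level": "ERROR", "msg": "timeout"}',
            '{"service": "api", "level": "INFO", "msg": "ok"}'])
raw_to_refined.run_once()
refined_to_agg.run_once()

raw.commit(['{"service": "db", "level": "ERROR", "msg": "deadlock"}',
            '{"service": "api", "level": "ERROR", "msg": "500"}'])
processed = raw_to_refined.run_once()  # only the second commit is read
refined_to_agg.run_once()
```

Each job touches only the rows committed since its previous run, so the aggregate stays current without rescanning the raw table.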

Here are a few interesting talks on Streaming Ingestion into Apache Iceberg and Streaming Event-Time Partitioning with Apache Flink and Apache Iceberg.

Analyzing log data from relational databases

Apache Flink supports CDC data parsing natively; you can read more about this in our previous blog, Performing Change Data Capture (CDC) of MySQL tables using FlinkSQL - Microsoft Community Hub. After the log data is parsed, it is converted into messages of the types INSERT, DELETE, UPDATE_BEFORE, and UPDATE_AFTER, which the Flink runtime recognizes, so the data is ready for the next level of real-time computing. Apache Iceberg has fully implemented the equality delete feature: when a user defines the records to be deleted, they can be written directly to Iceberg to delete the corresponding rows. This lets users perform streaming deletes on lakehouses. With the CDC data ready in Iceberg, other compute engines in the HDInsight on AKS cluster pool, such as Spark and Trino, can consume it from storage and take advantage of the fresh data in the Iceberg table in real time.
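As a rough illustration of how the four changelog message types can map onto data writes and equality deletes, consider the following Python sketch. It is a simplified model under stated assumptions: `ToyUpsertTable` and the `orders` rows are hypothetical, deletes are matched on a single key column, and a delete hides only rows written before it, loosely mirroring how Iceberg orders deletes by sequence number. It is not the Flink or Iceberg implementation.

```python
from enum import Enum


class RowKind(Enum):
    """The four changelog message types, with Flink's short notation."""
    INSERT = "+I"
    UPDATE_BEFORE = "-U"
    UPDATE_AFTER = "+U"
    DELETE = "-D"


class ToyUpsertTable:
    """Toy table that stores data rows and equality deletes, merged on read."""

    def __init__(self, key):
        self.key = key        # equality field, for example the primary key
        self.seq = 0          # sequence number, increases with every write
        self.data = []        # (sequence number, row)
        self.eq_deletes = []  # (sequence number, key value)

    def apply(self, kind, row):
        """Turn one changelog message into a data write or an equality delete."""
        self.seq += 1
        if kind in (RowKind.INSERT, RowKind.UPDATE_AFTER):
            self.data.append((self.seq, row))
        else:  # UPDATE_BEFORE and DELETE retract the old row by key
            self.eq_deletes.append((self.seq, row[self.key]))

    def scan(self):
        """Return live rows: a delete hides matching rows written before it."""
        live = []
        for seq, row in self.data:
            deleted = any(dseq > seq and dkey == row[self.key]
                          for dseq, dkey in self.eq_deletes)
            if not deleted:
                live.append(row)
        return live


changelog = [
    (RowKind.INSERT, {"id": 1, "status": "new"}),
    (RowKind.INSERT, {"id": 2, "status": "new"}),
    (RowKind.UPDATE_BEFORE, {"id": 1, "status": "new"}),
    (RowKind.UPDATE_AFTER, {"id": 1, "status": "paid"}),
    (RowKind.DELETE, {"id": 2, "status": "new"}),
]

orders = ToyUpsertTable(key="id")
for kind, row in changelog:
    orders.apply(kind, row)

live_rows = orders.scan()
```

The writer never rewrites existing data here; it only appends rows and delete markers, and readers reconcile the two at scan time, which is what makes streaming deletes cheap on the write path.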

Here is an interesting talk on Change Data Capture and Processing with Flink SQL

Stream-Batch unification

Flink's "Stream-Batch Unification" principle allows it to efficiently process both real-time (unbounded) and historical (bounded) data. This is particularly important in a data lakehouse setup, where real-time data ingestion and analysis can coexist with batch processing jobs. Flink's ability to handle high volumes of data, process real-time and batch data efficiently, and provide reliable and consistent data processing, aligns perfectly with the requirements of a data lakehouse.

With Flink and Iceberg, streaming data can be written to the Iceberg format through Flink, and the incremental data can still be computed in near real time through Flink. For batch processing, table snapshots can be processed with Flink's batch capabilities. With the combination of both, the results can be analyzed by various personas according to their business needs. This reduces the maintenance and development costs of the entire system.
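The core of stream-batch unification is that one piece of business logic serves both bounded and unbounded inputs. The Python sketch below illustrates that idea only; the function names and the sample payment events are hypothetical, and Flink's own runtime is far more involved than this.

```python
from collections import Counter
from typing import Iterable, Iterator


def count_by_key(events: Iterable[tuple]) -> Iterator[dict]:
    """Shared logic: running count per key, emitting the state after each event."""
    counts = Counter()
    for key, _value in events:
        counts[key] += 1
        yield dict(counts)


def run_batch(snapshot: list) -> dict:
    """Bounded input: consume the whole snapshot and keep only the final result."""
    result = {}
    for result in count_by_key(snapshot):
        pass
    return result


def run_streaming(source: Iterable[tuple]) -> Iterator[dict]:
    """Unbounded input: emit an updated result as each event arrives."""
    return count_by_key(source)


events = [("card", 10), ("bank", 25), ("card", 5)]

batch_result = run_batch(events)                    # historical snapshot
stream_updates = list(run_streaming(iter(events)))  # incremental arrivals
```

Because both paths call the same `count_by_key`, the last streaming update matches the batch result, so only one implementation of the logic needs to be developed and maintained.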

Here is an interesting talk from Flink Forward 2023 on The Vision and Practice of Stream and Batch Unification - LinkedIn's Story

Here is how you can get started:

- Running your first Apache Flink job with Azure HDInsight on AKS (microsoft.com)

- Table API and SQL - Use Iceberg Catalog type with Hive in Apache Flink® on HDInsight on AKS - Azure ...

- Get started today - https://aka.ms/starthdionaks

- Read our documentation - https://aka.ms/hdionaks-docs

- Join our HDInsight community page and share an idea on what you would like to see next - https://aka.ms/hdionakscommunity

- Have a question on how to migrate, or want to discuss a use case? - https://aka.ms/askhdinsight

In this Azure sample, we use a combination of a logging agent (Vector), Kafka on Azure HDInsight, and Flink on Azure HDInsight on AKS to store logs in Iceberg format, which can provide a highly cost-effective and powerful logging solution.

We are super excited to get you started.

Apache, Apache Flink, Apache Iceberg, Apache Kafka, Kafka, Iceberg, Flink and the Flink, Kafka, Iceberg logo are trademarks of the Apache Software Foundation.

Apache® and Apache Flink® are either registered trademarks or trademarks of the Apache Software Foundation in the United States and/or other countries.

No endorsement by The Apache Software Foundation is implied by the use of these marks.
