Unlocking Advanced Document Insights with Azure AI Document Intelligence

In the digital age, extracting insights from diverse documents is challenging because of their complex structure. Consider a financial analyst reviewing a company's quarterly report, which includes detailed tables of operating expenses and revenue alongside charts depicting sales growth. Traditional document processing solutions often fail to capture the hierarchy of a document's structure and the contextual relevance of its embedded figures, which leads to inefficient data extraction, analysis, and use, and in turn hampers decision making and productivity. As organizations strive to treat data as a strategic asset, they need document intelligence solutions that can accurately interpret the full range of document elements, including the ability to ask questions about the information within figures and charts.

To revolutionize how we interact with and derive insights from documents, Azure AI Document Intelligence is introducing groundbreaking features: hierarchical document structure analysis and figure detection.

Hierarchical document structure analysis

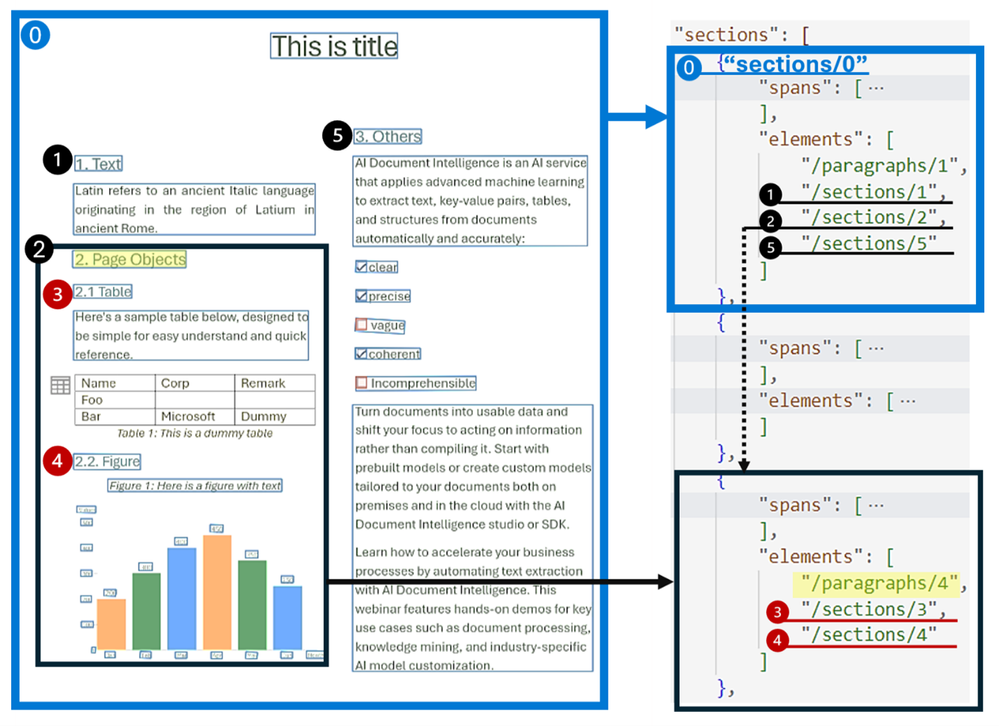

Hierarchical document structure analysis segments a document semantically into manageable sections, which improves comprehension, eases navigation, and makes information retrieval more efficient. The use of Retrieval Augmented Generation (RAG) in document generative AI highlights the importance of such a structured approach. By supporting multiple levels of sections and subsections, the Layout model identifies the relationships between sections and the objects within each, maintaining a coherent hierarchy throughout. The structured output can also be consumed in markdown format, giving straightforward access to sections and subsections. Figure 1 shows how sections are organized in the JSON output:

Figure 1. Illustration of hierarchical document structure analysis in JSON output
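As a rough illustration of how such a hierarchy could be walked, the sketch below assumes the JSON output exposes a `sections` array whose `elements` are pointer strings such as `/paragraphs/0` or `/sections/1`. The sample data and the helper names `resolve` and `outline` are hypothetical, and the real output carries many more fields.

```python
# Hypothetical, trimmed-down analyze result (assumed shape, not real service output).
result = {
    "paragraphs": [
        {"content": "Quarterly Report", "role": "title"},
        {"content": "Operating expenses", "role": "sectionHeading"},
        {"content": "Expenses rose 4% over the prior quarter."},
    ],
    "sections": [
        {"elements": ["/paragraphs/0", "/sections/1"]},
        {"elements": ["/paragraphs/1", "/paragraphs/2"]},
    ],
}


def resolve(result, pointer):
    """Split a pointer such as '/paragraphs/2' into (kind, index, object)."""
    _, kind, index = pointer.split("/")
    return kind, int(index), result[kind][int(index)]


def outline(result, section_index=0, depth=0):
    """Return indented lines for one section and everything nested inside it."""
    lines = []
    for pointer in result["sections"][section_index]["elements"]:
        kind, index, obj = resolve(result, pointer)
        if kind == "sections":
            # A nested section: recurse one level deeper.
            lines.extend(outline(result, index, depth + 1))
        elif kind == "paragraphs":
            lines.append("  " * depth + obj["content"])
        else:
            # Tables, figures, and other objects are shown as placeholders here.
            lines.append("  " * depth + f"[{kind} {index}]")
    return lines


print("\n".join(outline(result)))
```

The walk starts from a single root section for simplicity; a document with several top-level sections would need one call per root.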

Figure detection

Figures enrich the textual content, offering visual representations that simplify the understanding of complex information. The Layout model's figure detection feature comes with key properties such as boundingRegions, which detail the spatial locations of figures across document pages, including page numbers and the polygon coordinates that outline each figure's boundary. You can use this information to extract the figure or chart and make it an addressable component that can be processed further. Additionally, the spans and elements properties link figures to their relevant textual contexts, making it easier to understand the connection between text and visual data. The caption property adds to this by providing descriptive text for each figure, so that users can grasp the full context and significance of visual elements within a document.

Figure 2. Illustration of figure detection in JSON output
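To make the boundingRegions property concrete, here is a minimal sketch that turns a figure's polygon into a rectangular crop box. It assumes the polygon is a flat list of alternating x and y values and that, for PDF input, coordinates are in inches, so a rendering DPI is needed to reach pixels. The sample figure and the helper names `polygon_to_box` and `to_pixels` are hypothetical.

```python
def polygon_to_box(polygon):
    """Collapse a flat [x1, y1, x2, y2, ...] polygon into (left, top, right, bottom)."""
    xs = polygon[0::2]
    ys = polygon[1::2]
    return min(xs), min(ys), max(xs), max(ys)


def to_pixels(box, dpi):
    """Scale a box expressed in inches to pixel coordinates at the given DPI."""
    return tuple(round(value * dpi) for value in box)


# Hypothetical figure entry (assumed shape, not real service output).
figure = {
    "boundingRegions": [
        {"pageNumber": 1, "polygon": [1.0, 2.0, 4.5, 2.0, 4.5, 5.0, 1.0, 5.0]}
    ],
    "caption": {"content": "Figure 1. Sales growth"},
}

region = figure["boundingRegions"][0]
box_inches = polygon_to_box(region["polygon"])
box_pixels = to_pixels(box_inches, dpi=200)
print(region["pageNumber"], box_inches, box_pixels)
```

A (left, top, right, bottom) tuple is the shape that image libraries such as Pillow accept for cropping, so the pixel box can be applied to a page rendered at the same DPI.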

Advanced document processing with Azure AI Document Intelligence and Azure OpenAI service

This sample notebook demonstrates how combining hierarchical document structure analysis and figure detection with the Azure OpenAI GPT-4 Turbo with Vision (GPT-4V) model enables the extraction of advanced insights from documents.

Figure 3. Workflow to extract advanced document insights.

The process begins with the identification of different sections of a document, such as text blocks, page objects like tables, and figures. Azure AI's sophisticated algorithms analyze the hierarchical structure of the document, ensuring that each section and subsection is accurately identified and that their interrelationships are preserved. This analysis results in the generation of markdown output that reflects the document's structure, facilitating easy navigation and editing.

Next, the workflow shows how to crop the detected figures based on their bounding regions and then send both the figure body and its caption to the GPT-4V model for figure understanding. In this example, the GPT-4V model returns a description of the bar chart. This detailed description gives users a textual representation of the figure's content, which is crucial for understanding the data presented visually in the document.
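The request for this step might be assembled roughly as follows. This is only a sketch: it builds a chat message list that embeds the cropped image as a base64 data URL next to the caption, and it stops short of calling any service. The function name `build_figure_messages` and the prompt wording are assumptions, not taken from the sample notebook.

```python
import base64


def build_figure_messages(image_bytes, caption, mime_type="image/png"):
    """Build chat messages carrying a cropped figure and its caption."""
    encoded = base64.b64encode(image_bytes).decode("ascii")
    prompt = "Describe this figure in detail."
    if caption:
        prompt += f" Its caption is: {caption}"
    return [
        # Prompt text is illustrative only.
        {"role": "system", "content": "You describe charts and figures from documents."},
        {
            "role": "user",
            "content": [
                {"type": "text", "text": prompt},
                {
                    "type": "image_url",
                    "image_url": {"url": f"data:{mime_type};base64,{encoded}"},
                },
            ],
        },
    ]


# Placeholder bytes stand in for a real cropped PNG.
messages = build_figure_messages(b"abc", "Figure 1. Sales growth")
print(messages[1]["content"][0]["text"])
```

The resulting list would then be passed as the messages of a chat completion request against a vision-capable deployment; the deployment name, endpoint, and credentials are left out here because they depend on your own resource.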

In the enhanced markdown output, the figure content section no longer holds only the text detected within the figure; it now carries the full description provided by GPT-4V. This enriched output captures a semantic interpretation of the figure, allowing a more nuanced understanding of the visual data. With this refined information, the markdown output becomes even more useful when applied to the RAG sample notebook, supporting more precise and contextually aware document-based Q&A interactions.
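One way to picture this enrichment is to splice each generated description into the content at the position given by the figure's spans property. The sketch below assumes each figure carries a single span with `offset` and `length`, and that those offsets index the content string the same way Python does. The function name `enrich_content` and the sample data are hypothetical, and the sample notebook may perform the replacement differently.

```python
def enrich_content(content, figures, descriptions):
    """Replace each figure's span in the content with its generated description."""
    edits = [
        (figure["spans"][0]["offset"], figure["spans"][0]["length"], text)
        for figure, text in zip(figures, descriptions)
    ]
    # Apply edits from the end of the string backwards so that earlier
    # offsets stay valid after each replacement.
    for offset, length, text in sorted(edits, key=lambda edit: edit[0], reverse=True):
        content = content[:offset] + text + content[offset + length:]
    return content


# Hypothetical content where the figure's detected text is "Q1 Q2 Q3".
content = "Intro\nQ1 Q2 Q3\nOutro"
figures = [{"spans": [{"offset": 6, "length": 8}]}]
descriptions = ["Bar chart of quarterly sales."]

print(enrich_content(content, figures, descriptions))
```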

Get started

Azure AI Document Intelligence Studio

- Navigate to Document Intelligence Studio, select or upload a document (PDF, image, Office, or HTML file), and then specify the Analyze options.

- Click Run analysis, then view the output content and sample code in the right pane.

SDK and REST API

- Quickstart: Document Intelligence (formerly Form Recognizer) SDKs - Azure AI services | Microsoft Le... – use your preferred SDK or REST API to extract content and structure from documents.

- Use the Layout model to output markdown with the Python or .NET SDK.

Build “chat with your document” with semantic chunking

- This cookbook shows a simple demo of the RAG pattern with Azure AI Document Intelligence as the document loader and Azure AI Search as the retriever in LangChain. You can now feed the enhanced markdown output with figure understanding into MarkdownHeaderTextSplitter in LangChain for more accurate semantic chunking.

- This solution accelerator demonstrates an end-to-end baseline RAG pattern sample that uses Azure AI Search as a retriever and Azure AI Document Intelligence for document loading and semantic chunking.
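To show the idea behind header-based semantic chunking without depending on any library, here is a minimal pure-Python sketch. It is a stand-in for illustration only and is not LangChain's MarkdownHeaderTextSplitter. The function name `split_by_headers` is hypothetical, and the sketch ignores edge cases such as `#` lines inside fenced code blocks.

```python
import re

HEADER = re.compile(r"^(#{1,6})\s+(.*)$")


def split_by_headers(markdown):
    """Split markdown into chunks, each tagged with its chain of enclosing headers."""
    chunks = []
    path = []  # stack of (level, title) for the headers currently in scope
    body = []  # lines collected since the last header

    def flush():
        text = "\n".join(body).strip()
        if text:
            chunks.append({"headers": [title for _, title in path], "text": text})
        body.clear()

    for line in markdown.splitlines():
        match = HEADER.match(line)
        if match:
            # Close the chunk that belongs to the previous header path.
            flush()
            level = len(match.group(1))
            # Drop headers at the same or a deeper level before descending.
            while path and path[-1][0] >= level:
                path.pop()
            path.append((level, match.group(2).strip()))
        else:
            body.append(line)
    flush()
    return chunks


sample = "# Report\nIntro text.\n## Expenses\nRose 4%.\n## Revenue\nFlat."
for chunk in split_by_headers(sample):
    print(" > ".join(chunk["headers"]), "|", chunk["text"])
```

Because each chunk keeps its header chain, a retriever can return a passage together with the section it came from, which is the property that makes header-aware splitting useful for RAG.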

